Panzura Data Services gives you complete visibility, always-on governance, and real-time metadata access, all from one unified data management dashboard. Invest in efficiency and save thousands of IT hours as a result.

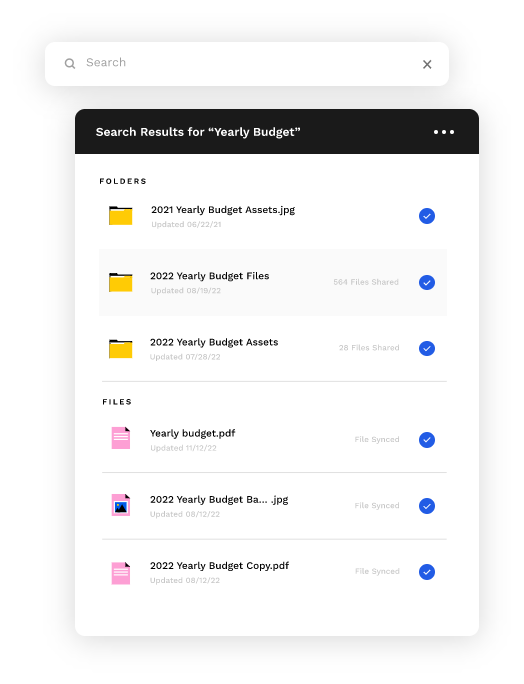

Rapidly ingest and query billions of files from multiple sources, giving you a powerful search engine to find anything in just moments.

Find any file, and save time, with our lightning-fast search that reaches across your entire global file system. It integrates seamlessly with CloudFS and with other file sources such as NetApp, Isilon, and VNX, giving you one-click search across all of them.

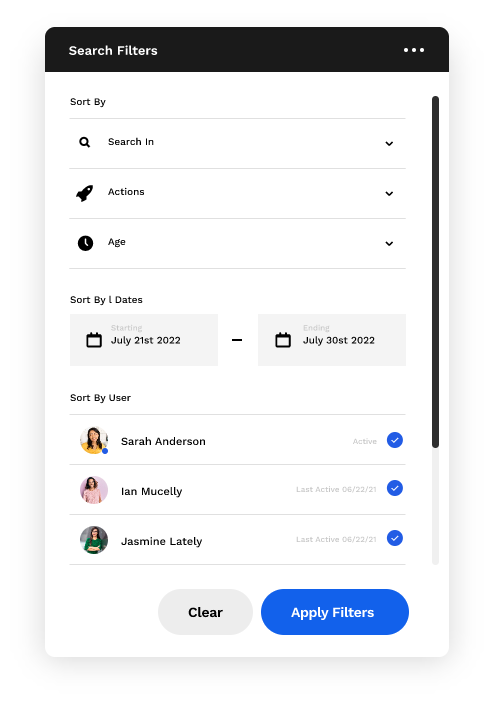

We take data governance seriously. So should you. Audit capabilities let you quickly and efficiently keep track of your data and find out what’s being done with it. Powerful query and filtering capabilities let you track every action taken on your files and identify who did what, and when. Search by date, user, file age, and other criteria to get the view you need.

Get an inside view of your global file system for security, governance, and administrative purposes.

Accidents happen. They just do. But what if it’s not an accident? Doesn’t matter. Data Services lets you find deleted, moved, or renamed files instantly and restore them to their original state with just one click. Your data will always be available. Period.


You’re paying for storage, so make sure you’re maximizing your capacity. Our easy-to-use analytics tools let you see what’s being used, what’s not, what’s taking up space, and what your users access most. This gives you full visibility into your storage and the information you need to maximize efficiency.

Healthy IT systems are essential to maintaining efficiency across your organization. Panzura lets you track active users, cache misses, access by location, and more. Create custom alerts that notify you the moment something happens.

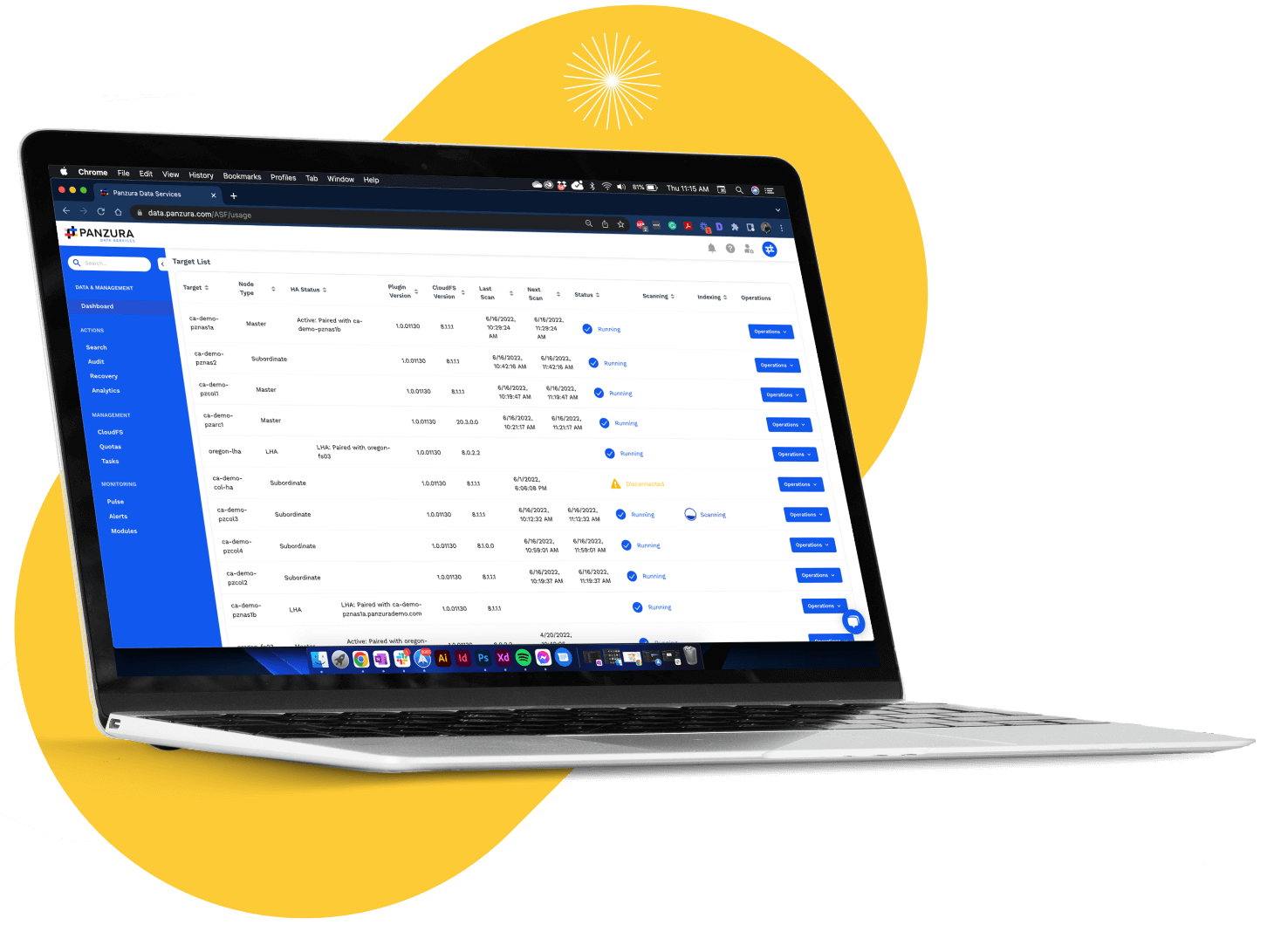

It’s almost impossible to manage what you can’t see. Data Services gives you essential visibility into your node inventories and their associated CloudFS deployments across your entire network.

Take control of your data and make it work for you. Data Services’ analytics dashboard makes it easy to see exactly what’s taking up space on all of your storage devices and what’s redundant, obsolete, or trivial.